Virtual and augmented reality solution provider Lobaki has introduced Lobaki Liaison, an AI-powered teaching assistant designed to help educators navigate and implement VR content in their classrooms.

Microsoft has expanded its AI-powered cybersecurity platform, introducing a suite of autonomous agents to help organizations counter rising threats and manage the growing complexity of cloud and AI security.

Chatbot platform Character.AI has introduced a new Parental Insights feature aimed at giving parents a window into their children's activity on the platform. The feature allows users under 18 to share a weekly report of their chatbot interactions directly with a parent's e-mail address.

Google has launched Gemini 2.5 Pro Experimental, a new artificial intelligence model designed to reason through problems before delivering answers, a shift the company says marks a major leap in AI capability.

AI-powered studying and learning platform Studyfetch has introduced Imagine Explainers, a new video creator that uses artificial intelligence to generate 10- to 60-minute explainer videos on any topic.

Recent announcements from Google and Microsoft highlight a slew of new AI capabilities for their search tools.

French AI startup Mistral AI has announced Mistral OCR, an advanced optical character recognition (OCR) API designed to convert printed and scanned documents into digital files with "unprecedented accuracy."

Generative AI is already shaping the future of education, but its true potential is only beginning to unfold.

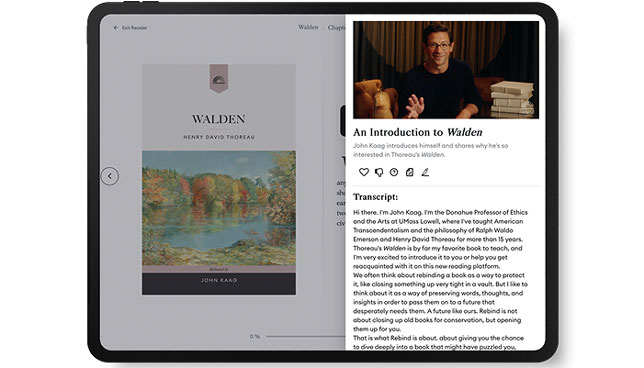

E-reading publishing company Rebind has announced a new "Classics in the Classroom" grant program for United States high school and college educators, providing free access to the company's AI-powered reading platform for the Fall 2025 term.

In a survey of parents with children aged 8 or younger, nearly a third of respondents (29%) said their child has used AI for school-related learning, according to a new report from Common Sense Media.